Designing Near-Term Impact in a Legacy Healthcare Enrollment System

A Design Science Case Study in Constraint-Driven Public Sector Systems

Overview

Role: UX Experience Associate Director (MU/DAI)

Client: Texas Medicaid & Healthcare Partnership (TMHP), via Accenture (state contractor)

Industry: Public Sector Healthcare · Government Technology

Engagement Type: RFP Strategy, UX Systems Design, Technical Specification

Focus: Human-Centered Process Redesign · Content Governance · AI-Ready Knowledge Systems

The broader engagement included two complementary workstreams: (1) a future-state vision for an online enrollment application, and (2) a near-term intervention strategy operating within current system constraints. This case study documents the latter, emphasizing immediate, actionable changes to reduce human error and process inefficiency.

Problem Context

Texas Medicaid’s provider enrollment process is among the most complex in the United States. At the time of this engagement, enrollment was largely paper-based, policy-dense, and manually processed, and it was difficult to navigate even for experienced providers. Many healthcare providers hired third-party specialists simply to complete the application correctly.

Applications were submitted on paper and manually entered into a legacy system by Accenture staff. This introduced error at multiple points:

providers misinterpreting forms or submitting incomplete information

processors inconsistently interpreting policies during data entry

applications cycling through months of rejection and rework

call centers overwhelmed by clarification requests and escalations

Critically, there was no central source of truth:

provider-facing instructions conflicted across web pages, PDFs, and call scripts

internal processor documentation had no ownership model or review cycle

“shadow guidance” emerged informally, creating systemic inconsistency

Design Constraint

This was a constraint-heavy environment:

legacy infrastructure could not be replaced

digital research tooling did not yet exist

automation was not feasible in the short term

recommendations had to work within the existing system

The design challenge was therefore not “how do we redesign the system,” but:

How do we reduce error, confusion, and rework now, while creating a foundation for future automation later?

Research Approach

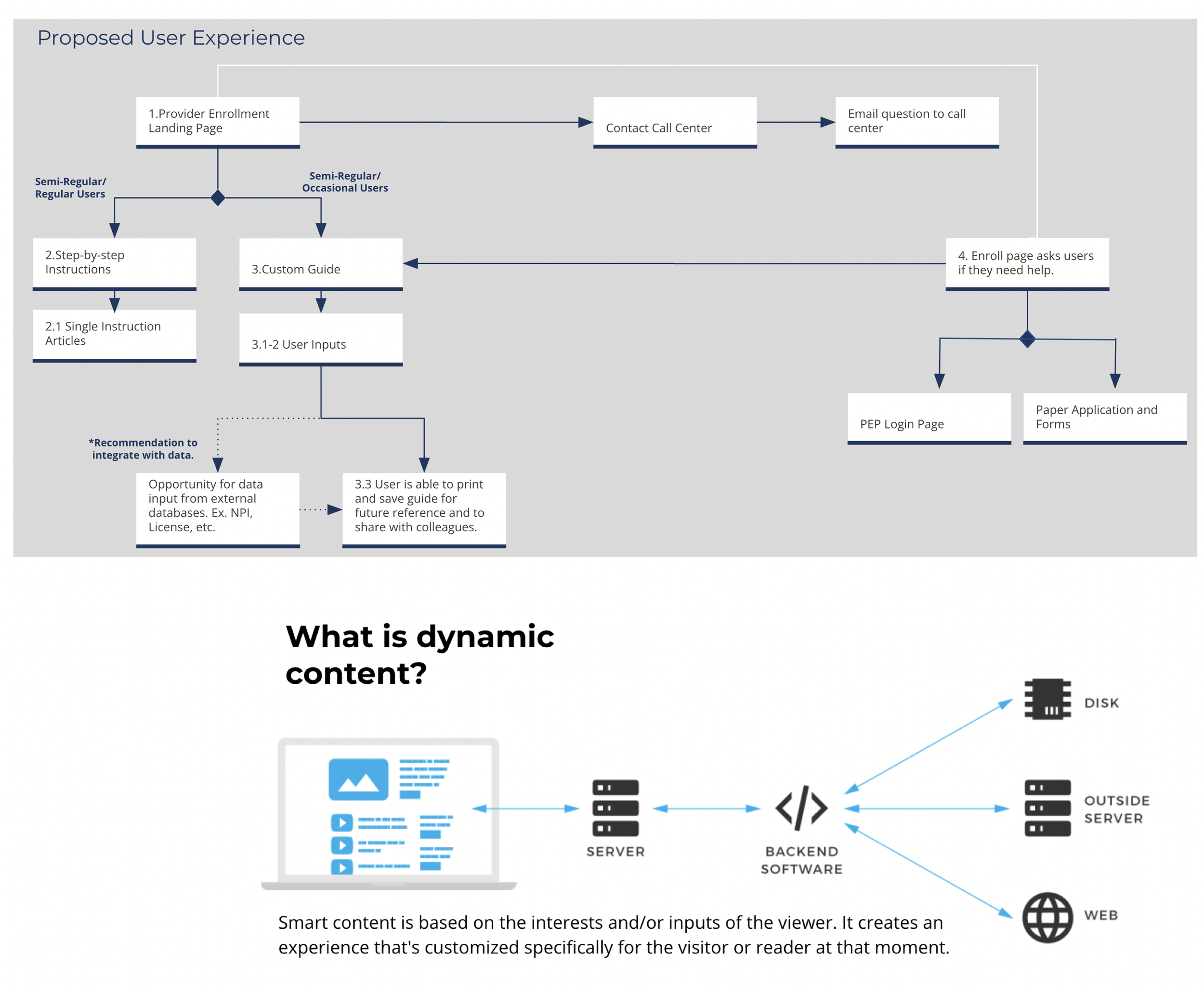

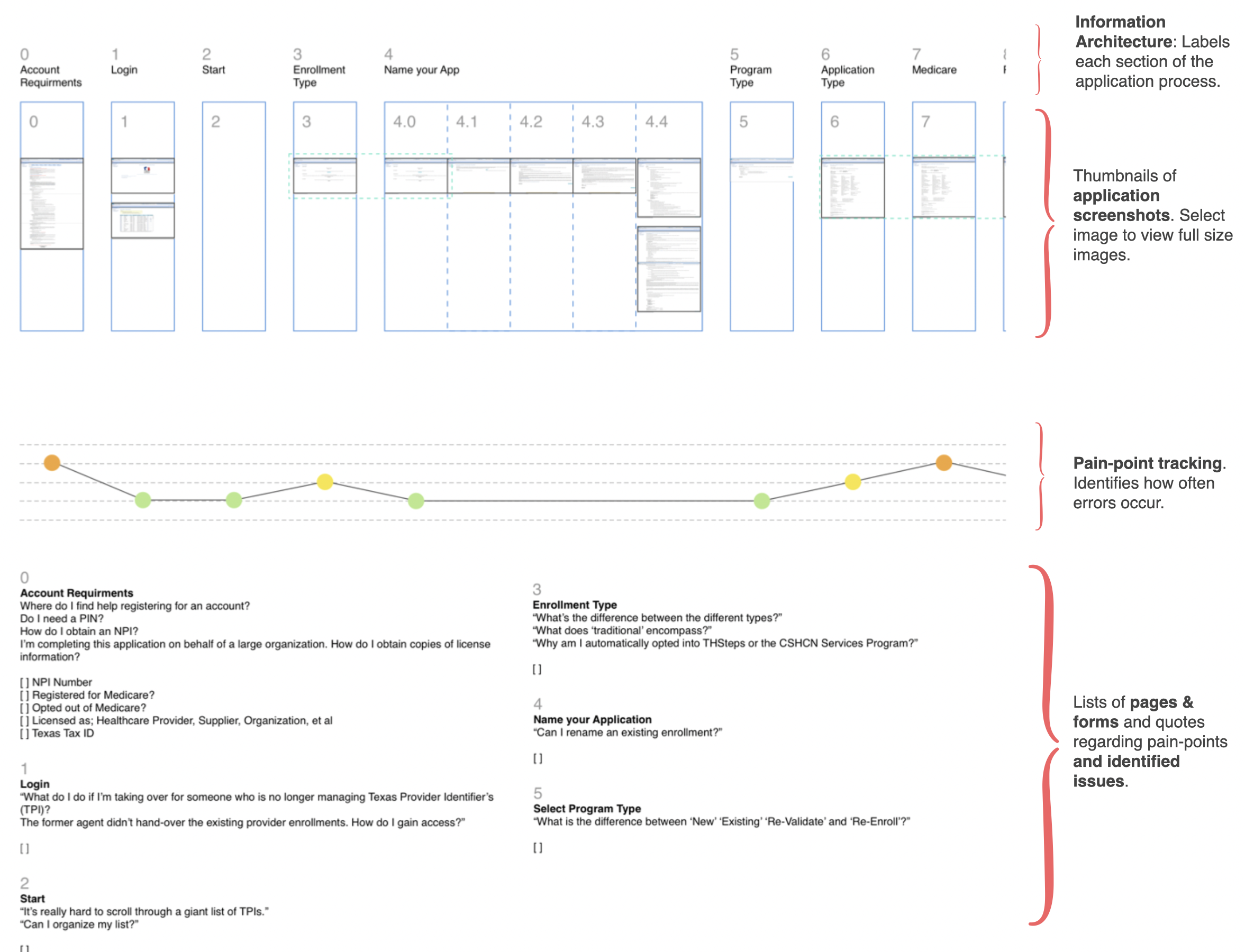

I used a mixed-methods, immersive research approach to understand how the system actually operated across roles, not how it was documented.

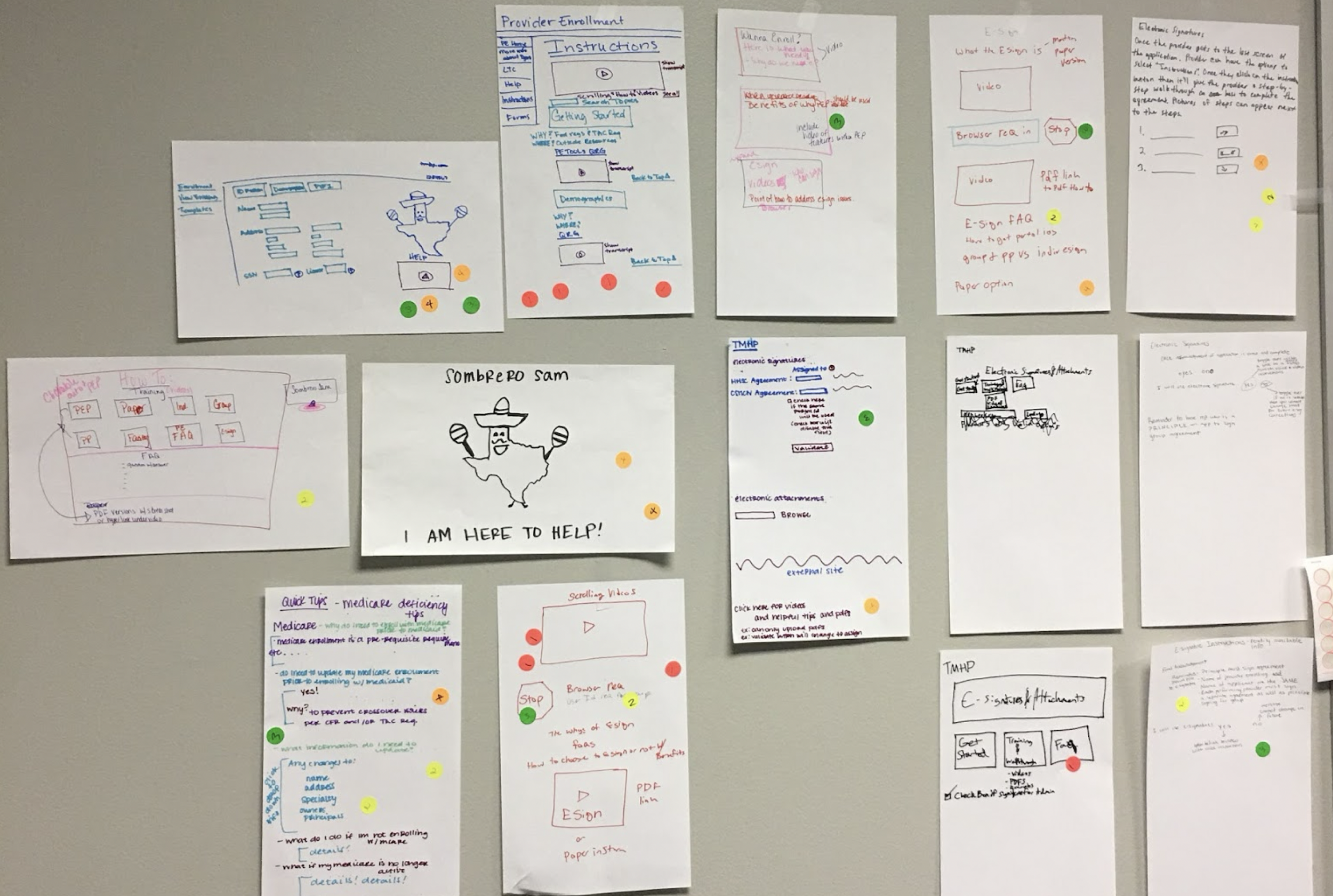

The physical research war room I set up at the TMHP office (described under Methods) served as both a synthesis mechanism and a collaborative artifact, fostering alignment and trust among policy, processing, and support teams.

Methods

Shadowed Accenture processors entering paper applications into the legacy system, documenting error patterns, decision fatigue, and policy ambiguity

Conducted contextual interviews with processors and QA leads

Facilitated cognitive walkthroughs with contact center agents using real support calls

Reviewed rejected and escalated application packets to identify systemic patterns

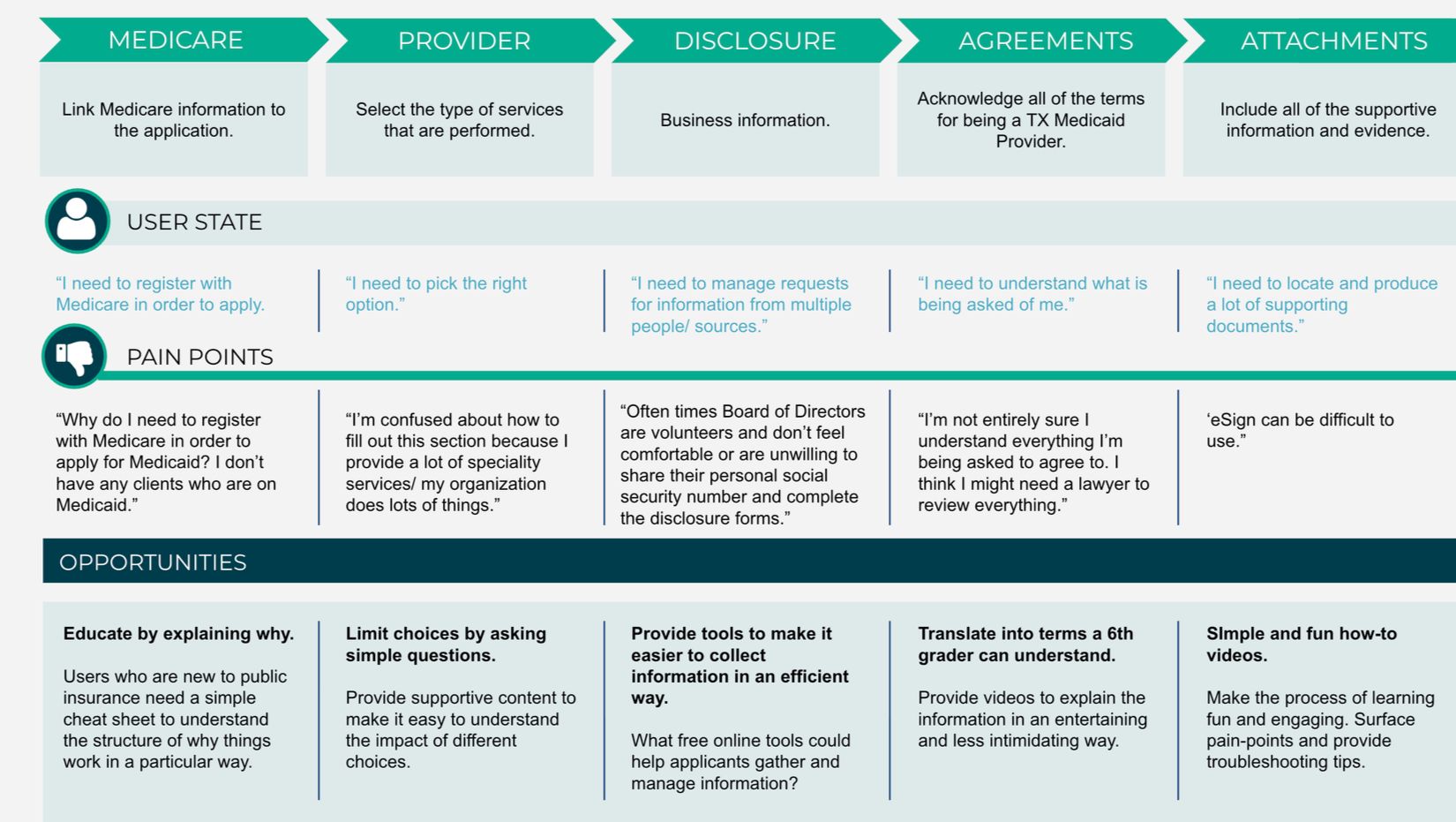

Mapped provider and processor journeys to surface rework loops and friction points

I created a physical “research war room” at the TMHP office:

manually transcribed interview notes, quotes, and form analysis onto Post-its

used affinity mapping and color-coded clustering to surface recurring breakdowns

invited TMHP stakeholders to walk the room with me, validating patterns and assumptions in real time

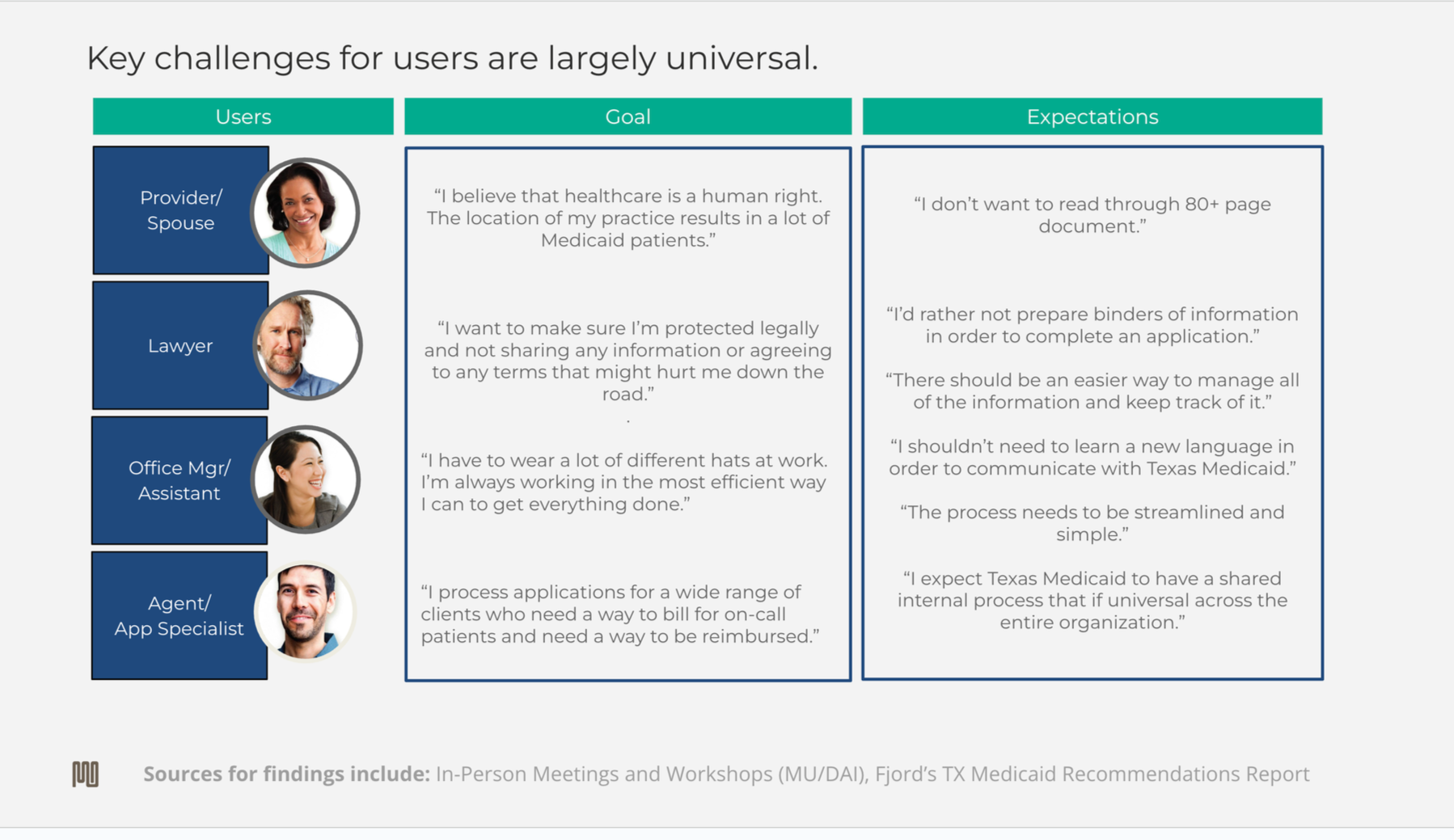

Operational KPI Analysis (Quantitative)

In addition to qualitative field research, this engagement incorporated quantitative analysis of operational performance data maintained by TMHP contractors (Accenture).

Method: Human KPI & Performance Data Analysis

I analyzed human performance metrics used by TMHP processing specialists to monitor enrollment throughput and support operations. These KPIs were not part of an automated analytics system; they were maintained through internal tracking tools and spreadsheets and reflected the realities of a legacy, paper-based workflow.

The metrics reviewed included:

Contact center resolution time

Volume and frequency of application rework

Application rejection and resubmission rates

Escalation patterns between processors and call center agents

Time-to-enrollment delays attributable to form errors or policy misinterpretation

These quantitative signals were used to:

Identify systemic bottlenecks rather than isolated usability issues

Correlate observed qualitative pain points with measurable operational impact

Validate where content ambiguity and process breakdowns were driving rework and escalation

Establish a baseline for improvement against which near-term recommendations could be evaluated

Because the data were manually entered and operationally constrained, the analysis focused on trend direction and pattern consistency rather than statistical precision. This approach was appropriate to the system’s maturity and avoided overconfidence in noisy or incomplete data.

Note: This image is illustrative and does not contain real project data. It is included to convey the sophistication of the data analyzed during the engagement.
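The trend-direction reading described above can be sketched in a few lines. This is a minimal illustration, not the analysis actually run on TMHP data: the function name `trend_direction`, the 5% tolerance, and the choice to compare medians of the earlier and later halves of a series are all assumptions made for the example. Medians are used because they are less sensitive to the occasional mis-keyed value in manually maintained spreadsheets.

```python
from statistics import median
from typing import Sequence


def trend_direction(values: Sequence[float], tolerance: float = 0.05) -> str:
    """Classify a KPI series as 'rising', 'falling', or 'flat'.

    Hypothetical helper: compares the median of the earlier half of the
    series with the median of the later half, so a single outlier does
    not flip the result. `tolerance` is an assumed threshold for "no
    meaningful change", expressed as a fraction of the early median.
    """
    if len(values) < 4:
        return "insufficient data"

    half = len(values) // 2
    early = median(values[:half])
    late = median(values[-half:])

    if early == 0:
        return "insufficient data"

    change = (late - early) / abs(early)
    if change > tolerance:
        return "rising"
    if change < -tolerance:
        return "falling"
    return "flat"
```

Given a weekly series of a metric such as average resolution time, the function returns only a direction label. That matches the intent of the analysis: the direction of the pattern is reported, and no precise effect size is claimed.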

Design Artifacts

Rather than proposing a full system overhaul, I designed intermediate artifacts that addressed immediate pain while enabling long-term evolution.

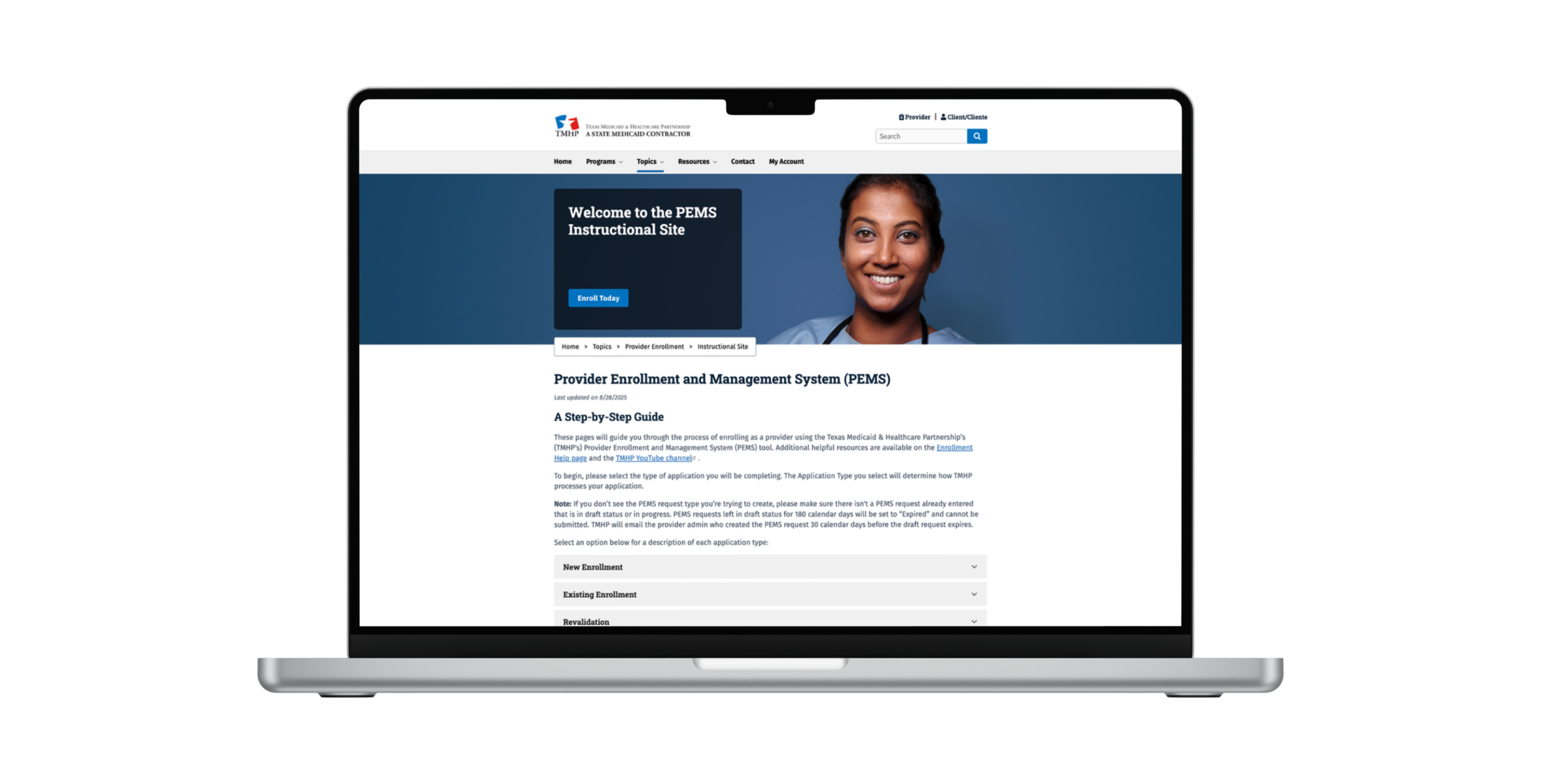

Artifact 1: Content Governance Framework (Foundation for Automation)

I proposed and initiated a content governance program that included:

a full inventory of public- and staff-facing content

ownership and review cycles aligned to policy changes

plain-language and UX writing standards

lightweight version control to prevent drift

a shared knowledge base structure for processors and call center agents

I also designed an information architecture and taxonomy that:

classified content by task, provider type, and policy area

enabled modular reuse across channels

supported future AI-powered retrieval and assistance

This reframed IA as infrastructure, not navigation.
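One way to picture this taxonomy is as a set of tagged content modules that can be filtered on any facet. The sketch below is illustrative only: the class name `ContentModule`, the field names, the `find_modules` helper, and every sample value are hypothetical and do not reflect the actual TMHP content model.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ContentModule:
    """A reusable unit of guidance, tagged along the three taxonomy facets."""
    module_id: str
    task: str                        # what the reader is trying to do
    provider_types: FrozenSet[str]   # which provider types it applies to
    policy_area: str                 # which policy area governs it
    owner: str                       # accountable owner, per the governance model
    body: str


def find_modules(
    modules: List[ContentModule],
    *,
    task: Optional[str] = None,
    provider_type: Optional[str] = None,
    policy_area: Optional[str] = None,
) -> List[ContentModule]:
    """Return modules matching every facet that was supplied."""
    matches = []
    for module in modules:
        if task is not None and module.task != task:
            continue
        if provider_type is not None and provider_type not in module.provider_types:
            continue
        if policy_area is not None and module.policy_area != policy_area:
            continue
        matches.append(module)
    return matches


# Placeholder library; identifiers and text are invented for illustration.
LIBRARY = [
    ContentModule("m1", "submit-application", frozenset({"individual", "group"}),
                  "enrollment", "policy-team", "How to complete the application."),
    ContentModule("m2", "revalidate", frozenset({"facility"}),
                  "revalidation", "operations-team", "How to revalidate."),
]
```

Because each module carries its own facets and owner, the same unit can be surfaced on a web page, in a call script, or by a future retrieval system without being rewritten for each channel.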

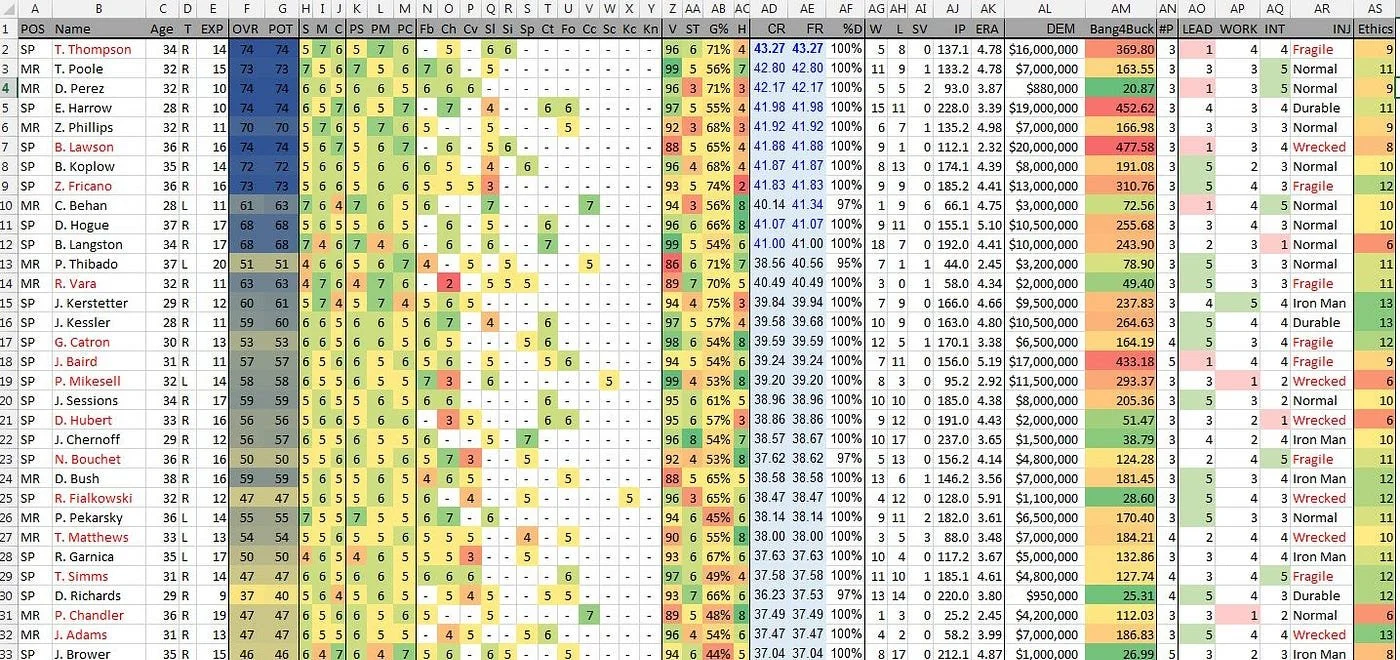

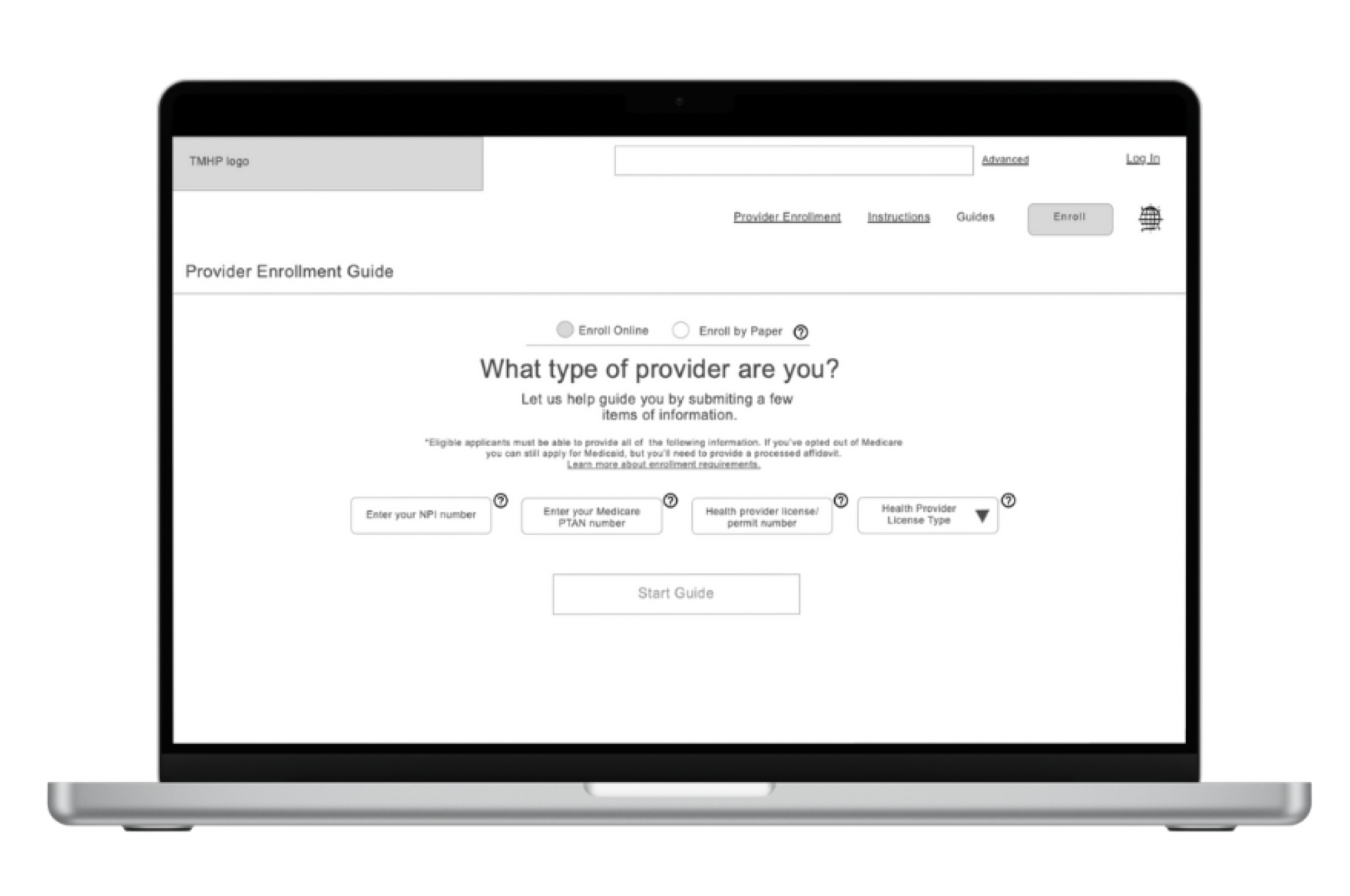

Artifact 2: Guided Support Tool (Logic-Based Decision Support)

To reduce errors before they enter the system, I designed and prototyped a guided support tool that:

asked providers a series of simple, logic-based questions

dynamically narrowed the scope of required forms and steps

produced a customized enrollment guide with only relevant content

reduced cognitive load and ambiguity at the point of submission

Example questions included:

What type of provider are you?

Will you bill Medicaid directly?

Are you part of a group practice?

The tool demonstrated how low-tech decision support could dramatically reduce error even within a paper-based system.
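The narrowing logic behind such a tool can be expressed as a short list of rules, each pairing a condition on the provider's answers with a step that becomes relevant when the condition holds. The following is a minimal sketch under stated assumptions: the `Answers` fields mirror the three example questions, but the answer values, the rule set, and the step names are placeholders, not actual TMHP forms or policy.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Answers:
    """Responses to the three example questions (values are placeholders)."""
    provider_type: str        # e.g. "individual" or "facility"
    bills_directly: bool
    in_group_practice: bool


# Each rule pairs a condition with the step it unlocks. Step names are
# hypothetical stand-ins for real forms and instructions.
Rule = Tuple[Callable[[Answers], bool], str]

RULES: List[Rule] = [
    (lambda a: True, "Base enrollment application (placeholder)"),
    (lambda a: a.provider_type == "facility", "Facility details section (placeholder)"),
    (lambda a: a.bills_directly, "Billing information section (placeholder)"),
    (lambda a: a.in_group_practice, "Group affiliation section (placeholder)"),
]


def build_guide(answers: Answers) -> List[str]:
    """Return only the steps whose conditions match the provider's answers."""
    return [step for applies, step in RULES if applies(answers)]
```

For an individual provider in a group practice who does not bill directly, `build_guide(Answers("individual", False, True))` returns the base application and the group affiliation section only. Keeping the rules as plain data means policy staff could review and update them without touching the surrounding logic.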

Core Insight

The most important finding was that many errors were not workflow failures, but content failures.

Inconsistent, outdated, and poorly governed content:

caused providers to submit incorrect applications

forced processors to rely on informal interpretations

drove repeated call center escalations

created cascading rework loops that no amount of training could fix

This reframed the problem:

Content was not a usability issue — it was a systemic blocker to transformation.

Without structured, governed, and policy-aligned content, neither efficiency nor automation was possible.

Evaluation & Outcomes

Although this was not a full system replacement, the interventions produced measurable impact:

Results

28% reduction in contact center resolution time

Decreased rework and application rejections

Improved provider confidence and processor efficiency

Strengthened alignment across policy, processing, and design

Established a foundation for scalable, AI-enabled knowledge systems

Feedback from providers and processors confirmed:

improved clarity

reduced uncertainty

increased confidence during submission

less reliance on call center escalation

Design Science Contribution

This project demonstrates several key design science contributions:

Constraint-Driven Innovation

Meaningful transformation can occur without ideal conditions if design focuses on structural leverage points.

Content as System Infrastructure

Governed, modular, policy-aligned content is a prerequisite for automation, not a downstream optimization.

Design as Knowledge Production

The artifacts produced (governance model, IA, guided tool) functioned as theory-in-use, not just deliverables.

Human-Centered Foundations for AI

AI-ready systems begin with clarity, structure, and governance, not models.

What I Learned

This project reinforced a core principle of my design practice:

Transformation doesn’t require perfect systems — it requires clarity, collaboration, and artifacts that respect reality while preparing for the future.

By designing within constraints rather than around them, we moved an outdated public-sector process forward one deliberate step at a time, with impact that extended well beyond the immediate intervention.